I was making a quick project that require to scrape some information from a website. BeautifulSoup is the library of choice.

Download takes 1-2 seconds per page, with high network latency because the server is in US and I am in London.

After writing the downloader, it takes more like 4-5 seconds per page, which is noticeably slow. How come? Could it be that large HTML is slow to parse?

Profiling

How to debug performance? Using a profiler.

Looks like the PyCharm built-in profiler is only available in the Professional edition, which I don’t have at home, so have to improvise something else.

This gets the job done:

import cProfile

cProfile.run('download.main()', 'app.profile')

# install snakeviz package

# generate report by calling "snakeviz app.profile"

BeautifulSoup

soup = BeautifulSoup(r.content, 'http.parser')

# http.parser is a built-in HTML parser in python 3.

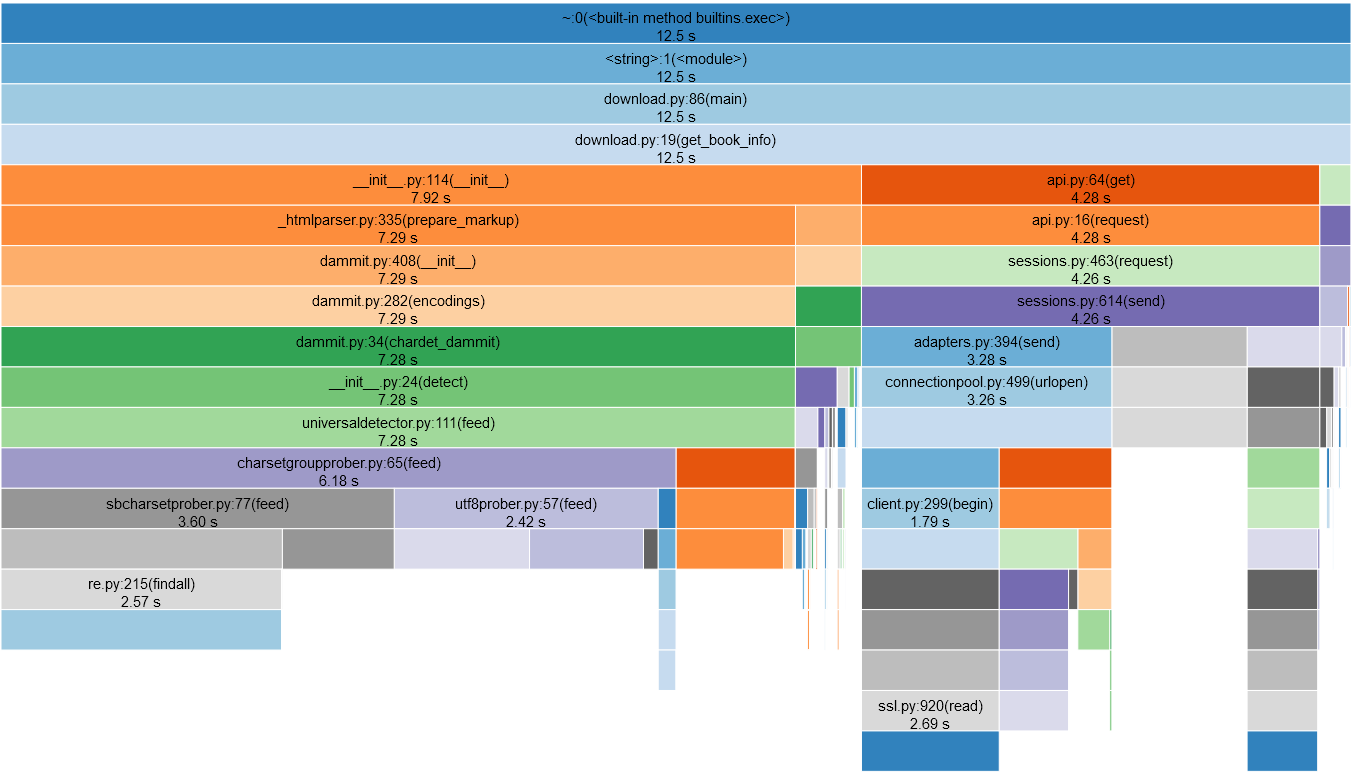

Translation:

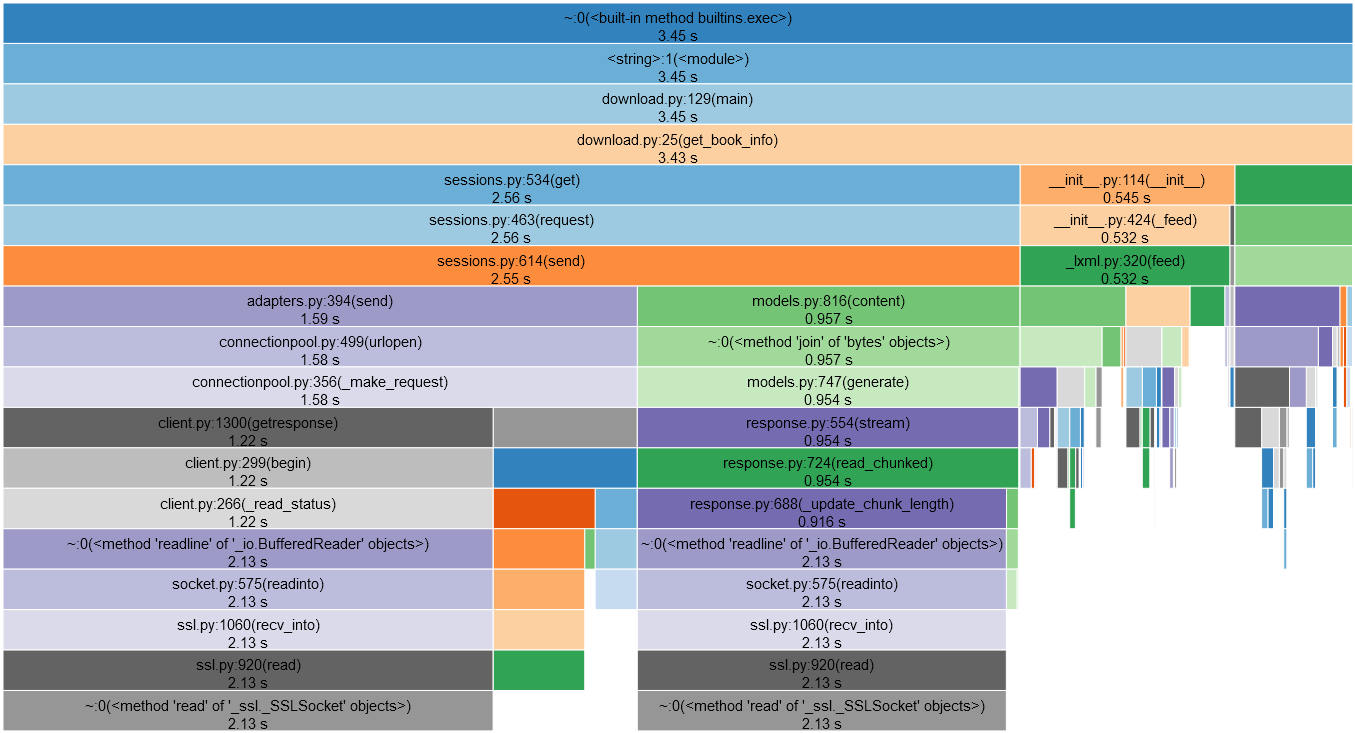

- 4.28 seconds to download 4 pages (

requests.api+requests.sessions) - 7.92 seconds to parse 4 pages (

bs4.__init__)

The HTML parsing is extremely slow indeed. Looks like it’s spending 7 seconds just to detect the character set of the document.

BeautifulSoup with lxml

A quick search indicates that http.parser is written in pure python and slow.

The internet is unanimous, one must install and use lxml alongside BeautifulSoup. lxml is a C parser that should be much much faster.

import lxml

[...]

soup = BeautifulSoup(r.content, 'lxml')

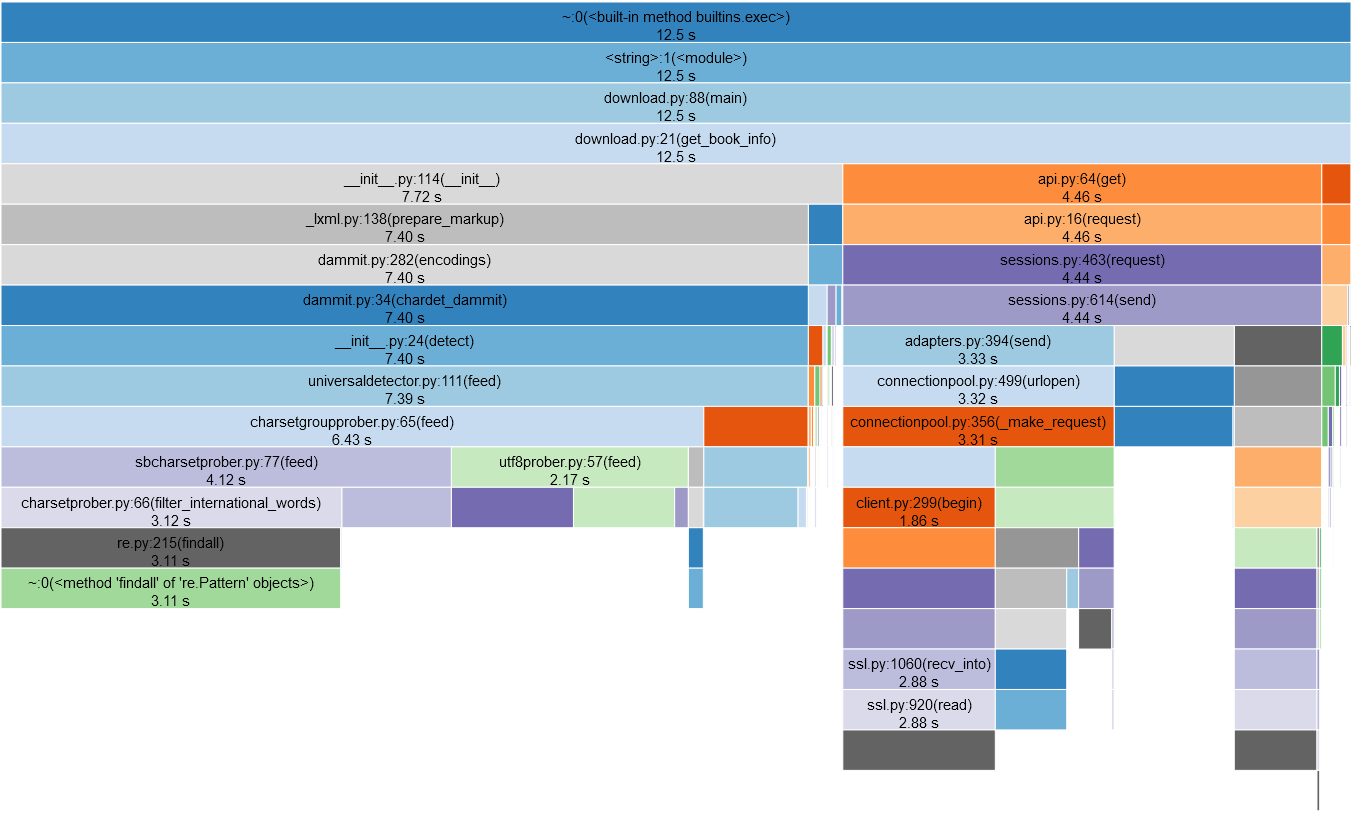

Install lxml…. Run… Get the same result.

Well, didn’t help.

BeautifulSoup with lxml and cchardset

Deep down in the google results, a link to the official documentation, with at the bottom of the page, a small section on performance (also advising to use lxml), including a hidden gem in the last sentence.

https://beautiful-soup-4.readthedocs.io/en/latest/#improving-performance

[…] You can speed up encoding detection significantly by installing the cchardet library.

import lxml

import cchardet

[...]

soup = BeautifulSoup(r.content, 'lxml')

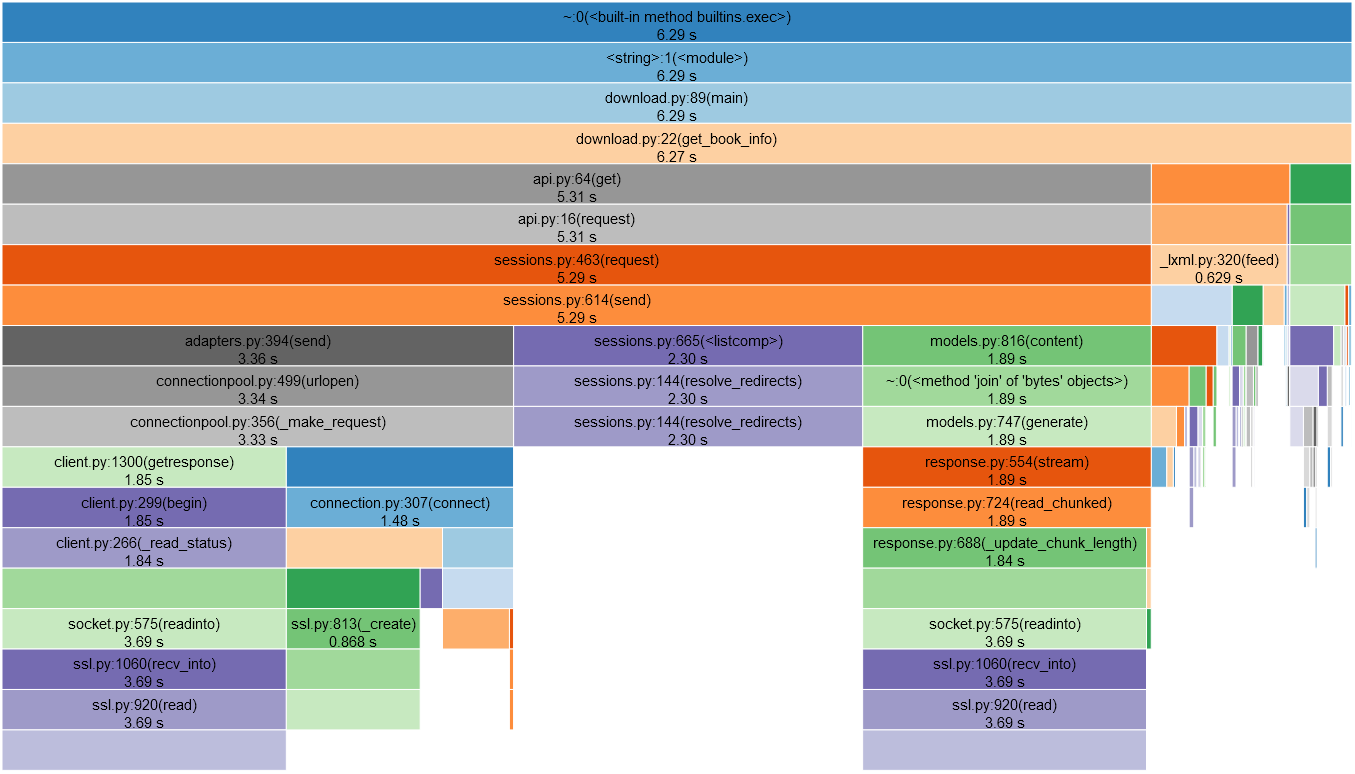

0.6 seconds to parse the page, down from 6 seconds.

That is 10 times faster. Great!

Hence the problem was the character detection.

Pooling Connection

Now… what was this session thing taking all the time? Is it normal?

5.3 seconds in requests.sessions with 3.3 in connection/adapter things. 🤔

Looks like python requests.get() is opening a new connection for each request. There is a whole second of latency between the local computer and the server in the US.

Let’s try to re-use connections. Easy enough with requests.

requests_session = requests.Session()

[...]

r = requests_session.get(url)

2.6 seconds to download the page, down from 5.3 seconds. Much better!

The test script is downloading 4 pages. Meaning there was 0.9 seconds of overhead to initiate each new TLS connection to the US.

Lessons Learned

Use BeautifulSoup with lxml and cchardset.

Also, reuse the connection.

The goal is to achieve about one request per second. It’s fast enough for this application with no risk of DDoSing the server.

Thanks for sharing how to use a profiler with python.

LikeLike

Really appreciate this, was experiencing very slow scraping and this significantly sped up the process.

LikeLike

Holy flamingoes, this is fantastic. Thank you kind sir ❤

LikeLike