TL;DR: 1) You should be aware that the Anaconda ecosystem is no longer free to use, 2) Anaconda Inc has started aggressively pursuing companies, and 3) this is the start of what may become some of the biggest cases of the decade on the use of unlicensed software (copyright violation / contractual violation).

What is Anaconda?

Anaconda Inc is a software company providing the Anaconda ecosystem. It’s a collection of software and tools around Python and R, with associated libraries, that are ready to use for developers and researchers.

- Anaconda Inc was founded circa 2012, under the name Continuum Analytics.

- Before 2020, the Anaconda ecosystem was free to use with no limitations.

- In 2020, Anaconda unilaterally changed the licensing terms to restrict commercial usage.

- In 2024, Anaconda unilaterally changed the licensing terms to remove exceptions that allowed downloading (and using) Anaconda and Conda.

- In 2024, Anaconda unilaterally changed the licensing terms to restrict usage in non-profit, research, education and government institutions, which were previously covered by an exception.

To summarize, Anaconda, Miniconda and conda are no longer free to use. The mere download of Anaconda or Miniconda is now considered unlicensed usage and you will be sued out of existence… unless you are an individual user or fall within a small and vanishing list of exceptions.

Anaconda is, or used to be, big. There are millions of Python developers around the world and most of them probably used Anaconda at some point, knowingly or unknowingly. I’d venture to say that all large companies used Anaconda or a part of its ecosystem at some point. There is a lot of usage in research institutions and universities. I think it’s fair to say that a lot of simulations around biology, physics and weather were or are running on the Anaconda ecosystem.

Very little software has switched overnight from free to paid like Anaconda, and very little software has had the reach and adoption of Anaconda.

Problem: You can’t use it anymore

This promises some of the greatest and most remarkable lawsuits of the decade. They have the grounds and the means to enforce their license. Now they are finally suing companies left and right to claim back 4 years of license fees and it’s going to be awesome!

Cases

You can find the cases on CourtListener: https://www.courtlistener.com/?type=r&q=anaconda%20inc&type=r&order_by=score%20desc

I will give a link when possible. A few of the documents have been released for public consumption. You can request all documents from PACER (the US court website). It costs a few dollars per document and there are hundreds of documents filed so far.

EDIT: Some of the cases were initially not available when I started drafting this blog post a few days ago. I notice that new documents are available now. I’ve been pinging a few people here and there. There may be some movement happening.

- Anaconda vs Intel, case started on 8th August 2024. This one had a bit of coverage in the media but the coverage was poor. https://www.courtlistener.com/docket/69029637/anaconda-inc-v-intel-corporation/

- Anaconda Inc vs Dell, case started on 12th December 2024 https://www.courtlistener.com/docket/69462510/anaconda-inc-v-dell-inc/

- Alibaba Cloud vs Anaconda, case started on 30th September 2024 https://www.courtlistener.com/docket/69213889/hangzhou-jicai-procurement-co-ltd-v-anaconda-inc/

- Airbus SAS vs Anaconda, case started on 13th February 2025 https://www.courtlistener.com/docket/69640674/airbus-sas-v-anaconda-inc/

Dell

You can find the initial complaint here, 24 pages https://storage.courtlistener.com/recap/gov.uscourts.txwd.1172816953/gov.uscourts.txwd.1172816953.1.0.pdf

It’s quite readable. If you want to have a good laugh and a good story to start the day, I’d recommend reading from page 14 point 46 until a few pages later.

It describes how Dell has been using the unlicensed software for years despite getting repeated notices of violation from Anaconda. Anaconda cut access to their software, then Dell pretended to ask for a license and Anaconda restored access, then Dell wasn’t interested in paying anymore, then Anaconda cut access again, and back and forth.

I’m looking forward to hearing more about this case.

Airbus

You can find the initial complaint here, 18 pages https://storage.courtlistener.com/recap/gov.uscourts.ded.88233/gov.uscourts.ded.88233.1.0.pdf

This one is the other way around; it’s Airbus who sued Anaconda for a Declaratory Judgment to drop the case.

It’s quite readable. You can start on page 5 point 19. It describes the history of the ecosystem, the gradual changes to the license terms, and the incoming lawsuits.

From page 10 point 39, I think it raises a lot of interesting points like Anaconda leaving open access to their repositories for years, fully aware that companies were continuing to use and download its software without a license, yet making no attempt to restrict access.

Page 11 of the document answers the question of how much Anaconda Inc is trying to squeeze out of the lawsuits. <<The amounts demanded in subsequent follow-on letters [from Anaconda to Airbus] have varied by orders of magnitude ranging between six- and eight-figures.>>

In its defense, Airbus claims that Anaconda is a collection of thousands of free and open-source packages by authors other than Anaconda. Airbus claims that the Anaconda distribution is not copyrightable and asks for the case to be dropped.

I’m looking forward to this case.

P.S. If I were advising Airbus, in addition to Anaconda leaving open access, I would strongly advise raising the issue of Anaconda Inc unfairly continuing to benefit from the ecosystem being falsely promoted as always free, and the issue of Anaconda Inc making no effort to correct the false information. For example, there are countless answers on Stack Overflow promoting anaconda/miniconda/conda as free, either in direct or implied terms. Some of the content was probably written by employees of Anaconda Inc. The false information continues to mislead thousands of people daily and drive them into license violation, including developers at Airbus and other affected companies.

Alibaba Cloud

You will be able to find the initial complaint here once the documents are added. In the meantime it’s only accessible on PACER for a fee of around $3 per document.

https://www.courtlistener.com/docket/69213889/hangzhou-jicai-procurement-co-ltd-v-anaconda-inc/

It should be similar to the Airbus case; it’s Alibaba Cloud / Hangzhou Jicai Procurement who sued Anaconda for a Declaratory Judgment to drop the case.

Intel

https://www.courtlistener.com/docket/69029637/anaconda-inc-v-intel-corporation/

1) Initial complaint, Anaconda Inc suing Intel, 17 pages https://storage.courtlistener.com/recap/gov.uscourts.ded.86584/gov.uscourts.ded.86584.1.0_1.pdf

You can start reading the initial complaint on page 11 item 30.

Intel used to pay for Anaconda licenses, until Intel stopped renewing the license at one point. Anaconda claims that Intel continued to use the software and created and distributed derivative works. Anaconda claims that Intel promoted unlicensed usage of Anaconda directly and through Intel channels and Intel derivative works. There are many claims and it’s very long. Too long to summarize.

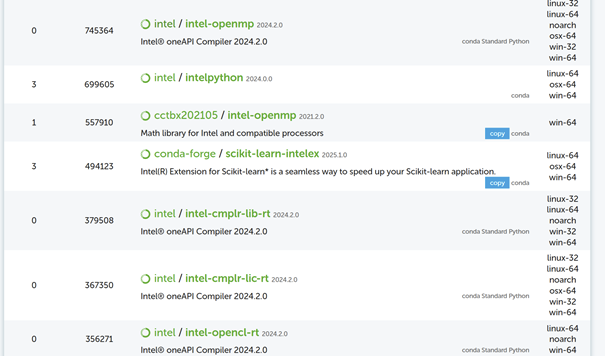

At some point in 2024, Anaconda Inc unilaterally shut down all Intel distribution channels hosted by Anaconda. https://anaconda.org/intel

You can still find some of the content in searches but the content is inaccessible.

Item 32. << Second, on information and belief, Intel, without Anaconda’s authorization, reproduces, creates derivative works based on, and distributes Anaconda’s Proprietary Components and other protectable elements of the Anaconda Distribution and Anaconda’s conda-compatible packages by distributing Intel’s “AI Analytics Toolkit,” or “AI Kit.” Intel advertises its AI Kit as providing “End-to-End Python Data Science and AI Acceleration” with “[p]roducts [that] are grouped to meet common AI workloads like machine learning, deep learning, and inference optimization,” and that users “can customize them to choose only the tools you need from conda repositories.” >>

Please keep these items in mind (items 32 to 37). They are about to become extremely important.

2) Answer to complaint by Intel, 12 pages https://storage.courtlistener.com/recap/gov.uscourts.ded.86584/gov.uscourts.ded.86584.14.0.pdf

You can start reading the answer on page 5.

Intel claims that Anaconda is not clearly defined and hence not covered by the asserted Copyright. The document repeats the same answer dozens of times. << Intel denies that the term “Anaconda Distribution” is clearly defined in the Complaint or elsewhere, and Intel thus denies based on lack of knowledge and information that the “Anaconda Distribution” is protected by the Asserted Copyright. >>

Then Intel tries to get the case dismissed on the grounds that Anaconda software was distributed and promoted as “always free” and that Intel relied on this representation.

The Idiocy of the Intel Case

While the cases against other companies have some merit, the case against Intel is very peculiar because Anaconda would not exist without Intel.

Anaconda is actually a copy and redistribution of Intel software, namely the Intel MKL Math Kernel Library, without which Anaconda would not exist.

Why isn’t there any claim explaining that?

A bit of history. https://stackoverflow.com/a/68897551/5994461

The fact of the matter is that Anaconda was founded in 2012 (under its previous name, Continuum Analytics) to make it easier to use Python with math and data science libraries. At the time, Python and associated software (numpy, pandas, scikit and math libraries) were only available in source code form. For non-tech people, you can think of source code as “blueprints” that are not usable as-is. They need to be compiled and bundled to generate software in executable form that can be run. It’s a complex and very involved task, especially if you want to make software that works reliably across numerous machines and/or machines of a different nature (Windows, Linux, Mac, Intel x86, AMD x64, ARM, etc.).

Intel was the provider of the hardware: billions of CPUs that are used in home computers, laptops, servers and compute grids to run simulations (these last two are the main target customers of Anaconda). In addition to selling the hardware, Intel provided software and tools that made it possible to perform and distribute math operations on said hardware, namely the Intel MKL (Math Kernel Library), later renamed to oneAPI MKL.

Anaconda, both the company and the software with the same name, was only created to combine and distribute the python interpreter + the Intel MKL library + python data science libraries built on top of the Intel MKL (numpy, pandas, scikit, etc…) + a variety of common libraries, in a ready-to-use form that can be run on most computers.

In essence, the sole purpose of existence of Anaconda is to distribute Intel software and more software built around it, without which Anaconda would not exist!

In 2012 there wasn’t any other way to run all this software and tooling. You had to build everything from source yourself. There wasn’t any viable alternative to using the Intel MKL. In fact, Anaconda was openly advertised as being built upon Intel libraries, which was a major selling point to users and companies using Intel hardware.

Around 2016, pip and binary wheel packages finally made it practical to package and distribute software for the Python ecosystem in usable form. It took a few more years to pick up and gain traction.

By 2020, Anaconda was getting obsolete in my opinion. It was no longer necessary as you could obtain most software directly with python/pip. That is not to say it was useless or without value. Anaconda did provide a vast collection of software and libraries that was ready to use out of the box, available together and compatible together. Doing it yourself, which most large companies and research institutions do, takes quite an investment and effort.

Thankfully for Anaconda Inc, by 2020, the world saw the rise of GPGPU computing driven by NVIDIA (CUDA). Software could run maths and simulations on NVIDIA graphics cards (GPU) rather than Intel/AMD processors (CPU). Once again, the libraries and tools to use GPUs came in variable forms and it required quite the effort to get anything usable out of them. Once again, Anaconda could step in to build, package and distribute GPU libraries in usable form. This is mostly a story around NVIDIA/CUDA that I should cover in a separate blog post. Intel happens to sell both CPUs and GPUs. (While I mostly focused on the MKL, which is the very first building block of the Anaconda ecosystem, there are certainly a few more pieces of Intel software that the Anaconda ecosystem very much uses or needs.)

Long story short, as of today Anaconda still exists to bundle, package and distribute Intel software. Anaconda does not exist without Intel.

You can download Anaconda if you’re an individual outside of a company. You can run “conda list” to see that it is using the Intel MKL library. You can run “numpy.show_config()” and “scipy.show_config()” to see that these are built upon the Intel MKL.
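Here is a minimal sketch of that check in Python, for readers who want to script it. The helper names (mentions_mkl, numpy_config_text) are made up for illustration; the only real library call is numpy.show_config(). It captures what NumPy prints about its build and searches for the string “mkl”, which is a rough heuristic and not proof of which BLAS library is actually loaded at runtime.

```python
import contextlib
import io
from typing import Optional


def mentions_mkl(config_text: str) -> bool:
    """Hypothetical helper: True if a build-config dump mentions MKL (case-insensitive)."""
    return "mkl" in config_text.lower()


def numpy_config_text() -> Optional[str]:
    """Hypothetical helper: capture what numpy.show_config() prints.

    Returns None when NumPy is not installed in this environment.
    """
    try:
        import numpy
    except ImportError:
        return None
    buffer = io.StringIO()
    # show_config() prints to stdout, so redirect stdout into a string buffer.
    with contextlib.redirect_stdout(buffer):
        numpy.show_config()
    return buffer.getvalue()


if __name__ == "__main__":
    text = numpy_config_text()
    if text is None:
        print("NumPy is not installed in this environment.")
    elif mentions_mkl(text):
        print("This NumPy build mentions MKL in its configuration.")
    else:
        print("No mention of MKL in this NumPy build configuration.")
```

A NumPy installed from another source (for example a pip wheel) will typically report a different BLAS library, so the result depends on where the package came from.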

It is absurd for Anaconda Inc to sue Intel. It is absurd for Anaconda Inc to claim that Intel’s software is a derivative work of Anaconda Inc software, when it’s Anaconda that is a derivative work of Intel libraries.

I can’t believe there is some idiot in Anaconda Inc who thought they’d make an example of Intel. Anaconda Inc sued Intel as their very first target, no less. The balls on these guys!

I can’t believe Intel did not bring that up in their counterclaim. Intel’s lawyers are paid thousands of dollars in increments of a few minutes, and they can’t notice the joke of being asked to pay for their own software. (In fairness, the engineers who were developing and testing Intel libraries with Anaconda/Miniconda/Conda for their mutual benefit should have highlighted the idiocy of the situation :D)

Needless to say, I am very curious to see how the case against Intel will develop.

When will Intel wake up and fight back?

I’d like to see Intel wake up and fight back.

The Intel MKL is distributed under the Intel MKL license, which allows free usage and redistribution. It’s a license with many clauses to protect Intel and the larger ecosystem. Anaconda Inc. has agreed to all these terms.

- See on Intel website: https://www.intel.com/content/www/us/en/developer/articles/license/end-user-license-agreement.html

- Download PDF on Intel website: https://cdrdv2.intel.com/v1/dl/getContent/749362

- See a copy on GitHub: https://github.com/rust-math/intel-mkl-src/blob/master/License.txt

1) There is an indemnity clause in some versions of the Intel MKL license: <<YOU AGREE TO INDEMNIFY AND HOLD INTEL HARMLESS AGAINST ANY CLAIMS AND EXPENSES RESULTING FROM YOUR USE OR UNAUTHORIZED USE OF THE SOFTWARE.>>

Anaconda Inc is trying to cause harm to Intel, for using and developing the Intel MKL library, which Anaconda uses and redistributes to make Anaconda. Anaconda Inc is trying to cause harm to Intel by promoting and distributing the Intel MKL only to then attack any user who makes use of it through Anaconda.

I’d love to see Intel invoke their indemnity clause. I think there are reasonable grounds for Intel to claim indemnity from Anaconda for all damages and legal costs that arise from the lawsuit between Anaconda Inc and Intel.

This would be very interesting to see. I don’t think there is any precedent where any (technology) company has invoked an indemnity clause, probably because there are very few licenses with an indemnity clause and no software company has ever tried to shut down the hardware/software company it relies on (because it would be an incredibly stupid thing to do!).

2) There is a termination clause in the Intel MKL license: << Termination. Intel may terminate your right to use the Software in the event of your breach of this Agreement and you fail to cure the breach within a reasonable period of time.>>

I’d love to see Intel seek an injunction ordering Anaconda to immediately stop using and distributing Intel software.

There are some limits on withdrawing a license (see estoppel in US law), otherwise it would be chaos if companies could cancel licenses at any point for any reason. In principle, the withdrawal comes into effect a reasonable time after notice is given and it can only affect future usage.

Consider the very unique situation: 1) Anaconda Inc is actively attacking Intel, causing them harm. 2) Anaconda Inc is actively attacking users of the Intel ecosystem, causing them harm. 3) Anaconda Inc unilaterally shut down the repository hosting Intel software without notice, which existed for the benefit of Anaconda and Intel and their mutual users. 4) Anaconda Inc is actively benefiting from and promoting itself as a distribution of the Intel libraries to gather users, only to subsequently demand licensing fees from those users (including Intel itself), despite having no ownership of the Intel software.

I think there are more than reasonable grounds for Intel to step in and withdraw the MKL license from Anaconda Inc.

This may have the side effect of shutting down Anaconda Inc as they cannot exist without Intel software. Oops.